
The Vinum Volume Manager

Creating Vinum drives

Before you can do anything with Vinum, you need to reserve disk space for it. Vinum drive objects are in fact a special kind of disk partition, of type vinum. We've seen how to create disk partitions on page 215. If in that example we had wanted to create a Vinum drive instead of a UFS partition, we would have defined it like this:

    8 partitions:
    #        size   offset    fstype   [fsize bsize bps/cpg]
      c:  6295133        0    unused        0     0         # (Cyl.    0 - 10302)
      b:  1048576        0      swap        0     0         # (Cyl.    0 - 10302)
      h:  5246557  1048576     vinum        0     0         # (Cyl.    0 - 10302)
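The numbers in the listing above can be checked with a little arithmetic. The following is a minimal sketch, not part of any FreeBSD tool: it assumes 512-byte sectors, and the helper names `size_mb`, `fits_in_slice` and `overlaps` are made up for illustration.

```python
SECTOR_BYTES = 512  # assumption: the sizes in the listing are 512-byte sectors

# (name, size, offset, fstype), copied from the disklabel listing above
partitions = [
    ("c", 6295133, 0, "unused"),
    ("b", 1048576, 0, "swap"),
    ("h", 5246557, 1048576, "vinum"),
]

def size_mb(sectors):
    """Convert a sector count to megabytes (1 MB = 1024 * 1024 bytes)."""
    return sectors * SECTOR_BYTES / (1024 * 1024)

def fits_in_slice(parts):
    """True if every partition ends at or before the end of partition c."""
    whole = next(size for name, size, _, _ in parts if name == "c")
    return all(offset + size <= whole for _, size, offset, _ in parts)

def overlaps(p, q):
    """True if two partitions share at least one sector."""
    _, p_size, p_off, _ = p
    _, q_size, q_off, _ = q
    return p_off < q_off + q_size and q_off < p_off + p_size

print(size_mb(partitions[1][1]))                 # swap partition size in MB
print(fits_in_slice(partitions))                 # does everything fit inside c?
print(overlaps(partitions[1], partitions[2]))    # do swap and vinum overlap?
```

Under these assumptions, the swap partition b occupies the first 512 MB of the slice and the vinum partition h starts at the sector where b ends, so the two do not overlap.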

Starting Vinum

Vinum comes with the base system as a kld. It gets loaded automatically when you run the vinum command. It's possible to build a special kernel that includes Vinum, but this is not recommended: in this case, you will not be able to stop Vinum.

FreeBSD Release 5 includes a new method of starting Vinum. Put the following lines in /boot/loader.conf:

    vinum_load="YES"
    vinum.autostart="YES"

The first line instructs the loader to load the Vinum kld, and the second tells it to start Vinum during the device probes. Vinum still supports the older method of setting the variable start_vinum in /etc/rc.conf, but this method may go away soon.
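As a rough illustration of the two settings described above, the sketch below checks whether a loader.conf-style text enables both of them. It is only an approximation: the function names are hypothetical, and the parsing is far simpler than what the real loader does.

```python
def parse_loader_conf(text):
    """Parse name="value" lines, ignoring blank lines and # comments."""
    settings = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()
        if "=" not in line:
            continue
        name, value = line.split("=", 1)
        settings[name.strip()] = value.strip().strip('"')
    return settings

def vinum_starts_at_boot(text):
    """True if both the kld load and the autostart setting are YES."""
    settings = parse_loader_conf(text)
    wanted = ("vinum_load", "vinum.autostart")
    return all(settings.get(name, "").upper() == "YES" for name in wanted)

example = 'vinum_load="YES"\nvinum.autostart="YES"\n'
print(vinum_starts_at_boot(example))
```

With only the first line present, the check fails: by the description above, the kld would be loaded but Vinum would not be started during the device probes.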

Configuring Vinum

Vinum maintains a configuration database that describes the objects known to an individual system. You create the configuration database from one or more configuration files with the aid of the vinum utility program. Vinum stores a copy of its configuration database on each Vinum drive. This database is updated on each state change, so that a restart accurately restores the state of each Vinum object.

The configuration file

The configuration file describes individual Vinum objects. To define a simple volume, you might create a file called, say, config1, containing the following definitions:

    drive a device /dev/da1s2h
    volume myvol
      plex org concat
        sd length 512m drive a

This file describes four Vinum objects:

- The drive line describes a disk partition (drive) and its location relative to the underlying hardware. It is given the symbolic name a. This separation of the symbolic names from the device names allows disks to be moved from one location to another without confusion.

- The volume line describes a volume. The only required attribute is the name, in this case myvol.

- The plex line defines a plex. The only required parameter is the organization, in this case concat. No name is necessary: the system automatically generates a name from the volume name by adding the suffix .px, where x is the number of the plex in the volume. Thus this plex will be called myvol.p0.

- The sd line describes a subdisk. The minimum specifications are the name of a drive on which to store it, and the length of the subdisk. As with plexes, no name is necessary: the system automatically assigns names derived from the plex name by adding the suffix .sx, where x is the number of the subdisk in the plex. Thus Vinum gives this subdisk the name myvol.p0.s0.
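The naming rules in the list above can be sketched in a few lines of Python. This is an illustration of the rules as described here, not Vinum's own parser: the function `name_objects` is hypothetical, and it ignores explicitly named plexes and subdisks as well as every attribute other than the object type.

```python
def name_objects(config_text):
    """Return (kind, name) pairs for each object in a Vinum-style config."""
    objects = []
    volume = plex = None
    plex_count = sd_count = 0
    for line in config_text.splitlines():
        words = line.split()
        if not words:
            continue
        kind = words[0]
        if kind == "drive":
            objects.append(("drive", words[1]))
        elif kind == "volume":
            volume, plex_count = words[1], 0
            objects.append(("volume", volume))
        elif kind == "plex":
            # Plex names: volume name plus .pN, counted per volume
            plex = f"{volume}.p{plex_count}"
            plex_count += 1
            sd_count = 0
            objects.append(("plex", plex))
        elif kind == "sd":
            # Subdisk names: plex name plus .sN, counted per plex
            objects.append(("sd", f"{plex}.s{sd_count}"))
            sd_count += 1
    return objects

config1 = """\
drive a device /dev/da1s2h
volume myvol
  plex org concat
    sd length 512m drive a
"""

for kind, name in name_objects(config1):
    print(kind, name)
```

For the file above, the sketch produces the four names discussed in the text: a, myvol, myvol.p0 and myvol.p0.s0.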

After processing this file, vinum(8) produces the following output:

    vinum -> create config1
    1 drives:
    D a                     State: up       /dev/da1s2h     A: 3582/4094 MB (87%)

    1 volumes:
    V myvol                 State: up       Plexes:       1 Size:        512 MB

    1 plexes:
    P myvol.p0            C State: up       Subdisks:     1 Size:        512 MB

    1 subdisks:
    S myvol.p0.s0           State: up       D: a            Size:        512 MB

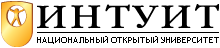

This output shows the brief listing format of vinum. It is represented graphically in Figure 12-4.

This figure, and the ones that follow, represent a volume, which contains the plexes, which in turn contain the subdisks. In this trivial example, the volume contains one plex, and the plex contains one subdisk.
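The A: field on the drive line of the listing appears to report the space still available on the drive, out of its total size. A minimal sketch of that accounting follows. It assumes whole-megabyte arithmetic and a percentage that is truncated rather than rounded, which is inferred from the listings in this section and not taken from Vinum's source. The function name `free_space` is hypothetical.

```python
def free_space(total_mb, subdisk_mb):
    """Return (free MB, free percent) for a drive holding the given subdisks."""
    free = total_mb - sum(subdisk_mb)
    percent = free * 100 // total_mb  # assumption: truncated, not rounded
    return free, percent

# Drive a after creating myvol: one 512 MB subdisk on a 4094 MB drive
print(free_space(4094, [512]))        # (3582, 87)

# A drive that holds two 512 MB subdisks
print(free_space(4094, [512, 512]))   # (3070, 74)
```

The second result matches what drive a shows in the mirrored example later in this section, where it holds one subdisk of each volume.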

Creating a file system

You create a file system on this volume in the same way as you would for a conventional disk:

    # newfs -U /dev/vinum/myvol
    /dev/vinum/myvol: 512.0MB (1048576 sectors) block size 16384, fragment size 2048
            using 4 cylinder groups of 128.02MB, 8193 blks, 16512 inodes.
    super-block backups (for fsck -b #) at:
     32, 262208, 524384, 786560

This particular volume has no specific advantage over a conventional disk partition. It contains a single plex, so it is not redundant. The plex contains a single subdisk, so there is no difference in storage allocation from a conventional disk partition. The following sections illustrate various more interesting configuration methods.

Increased resilience: mirroring

The resilience of a volume can be increased either by mirroring or by using RAID-5 plexes. When laying out a mirrored volume, it is important to ensure that the subdisks of each plex are on different drives, so that a drive failure will not take down both plexes. The following configuration mirrors a volume:

    drive b device /dev/da2s2h
    volume mirror
      plex org concat
        sd length 512m drive a
      plex org concat
        sd length 512m drive b

In this example, it was not necessary to specify a definition of drive a again, because Vinum keeps track of all objects in its configuration database. After processing this definition, the configuration looks like:

    2 drives:
    D a                     State: up       /dev/da1s2h     A: 3070/4094 MB (74%)
    D b                     State: up       /dev/da2s2h     A: 3582/4094 MB (87%)

    2 volumes:
    V myvol                 State: up       Plexes:       1 Size:        512 MB
    V mirror                State: up       Plexes:       2 Size:        512 MB

    3 plexes:
    P myvol.p0            C State: up       Subdisks:     1 Size:        512 MB
    P mirror.p0           C State: up       Subdisks:     1 Size:        512 MB
    P mirror.p1           C State: initializing     Subdisks:     1 Size:        512 MB

    3 subdisks:
    S myvol.p0.s0           State: up       D: a            Size:        512 MB
    S mirror.p0.s0          State: up       D: a            Size:        512 MB
    S mirror.p1.s0          State: empty    D: b            Size:        512 MB

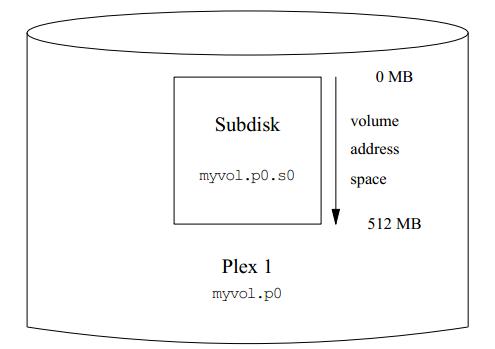

Figure 12-5 shows the structure graphically.

In this example, each plex contains the full 512 MB of address space. As in the previous example, each plex contains only a single subdisk.
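The layout rule for mirrors, that the subdisks of each plex should be on different drives, is easy to check mechanically. The sketch below is an illustration only: the function `shared_drives` and the dictionary layout are assumptions made for this example and have nothing to do with how Vinum stores its configuration.

```python
def shared_drives(plexes):
    """Map each drive used by more than one plex to the plexes that use it."""
    users = {}
    for plex, drives in plexes.items():
        for drive in set(drives):
            users.setdefault(drive, []).append(plex)
    return {d: sorted(p) for d, p in users.items() if len(p) > 1}

# The mirrored volume from this section: one plex on drive a, one on drive b
mirror = {"mirror.p0": ["a"], "mirror.p1": ["b"]}
print(shared_drives(mirror))   # {}

# A layout to avoid: both plexes on drive a
bad = {"mirror.p0": ["a"], "mirror.p1": ["a"]}
print(shared_drives(bad))      # {'a': ['mirror.p0', 'mirror.p1']}
```

An empty result means that no single drive failure can take down more than one plex of the volume.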

Note the state of mirror.p1 and mirror.p1.s0: initializing and empty respectively. There's a problem when you create two identical plexes: to ensure that they're identical, you need to copy the entire contents of one plex to the other. This process is called reviving, and you perform it with the start command:

    vinum -> start mirror.p1
    vinum[278]: reviving mirror.p1.s0
    Reviving mirror.p1.s0 in the background
    vinum -> vinum[278]: mirror.p1.s0 is up

During the start process, you can look at the status to see how far the revive has progressed:

    vinum -> list mirror.p1.s0
    S mirror.p1.s0          State: R 43%    D: b            Size:        512 MB

Reviving a large volume can take a very long time. When you first create a volume, the contents are not defined. Does it really matter if the contents of each plex are different? If you will only ever read what you have first written, you don't need to worry too much. In this case, you can use the setupstate keyword in the configuration file. We'll see an example of this below.